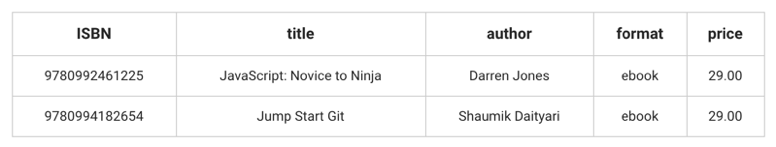

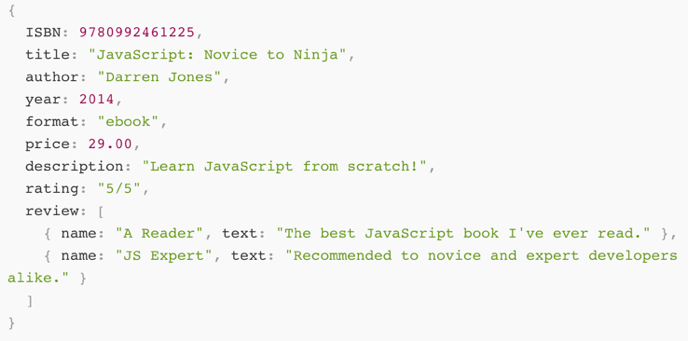

class: center, middle

# Elasticsearch

## Introduction

---

## Today

* Intro to Elasticsearch

--

* CRUD operations with ES

--

## Tomorrow

--

* Advanced ES features

--

* Integrating ES into a Spring application

---

## Use cases

* Advanced search
* Full-text search
* Online stores (e.g. "suggested for you" features)
* Indexing very large data sets

--

* Analytics - for very large datasets

--

* **Not** for use as a primary datastore (risk of data loss/corruption)

---

## Relational Databases

* Examples: MySQL, PostgreSQL, SQLite

--

* Defined schema w/ several tables per database or schema

--

* Allows you to set restrictions, run `JOIN` queries, avoid consistency issues

--

* Data is "normalized" to reduce redundancy and update issues

---

## Non-Relational (NoSQL) Databases

* Examples: MongoDB, CouchDB, Redis, Apache Cassandra

--

* Flexible, dynamic table design

--

* Fast, can be distributed

--

* No defined schema

---

## NoSQL Document

Data is stored as documents, rather than rows in tables

---

## JSON

<div class = "left-column" >
<ul>
<li>JavaScript Object Notation
<li>Data is text
<li>Converts to a JS object simply and quickly, with no custom parsing code
<li>Key:Value pairs make it easy to understand
</ul>
</div>

<div class = "right-column">
<img src = "images/JSONexample.png">
</div>

---

## Lucene

Elasticsearch is built on Lucene, a search library written in Java.

--

* Stores data in an **index** of documents
* Each **document** has **fields**
* For now, think of an index as the analog of a database in a relational system

--

* Inverted indexes - like the index in the back of a textbook (faster to search, slower to write)

--

* Allows fields to be weighted using the `boost` parameter

--

* Lucene Query Syntax

---

## Talking To Elasticsearch

With Java, we can talk to Elasticsearch via:

--

* **Node client** - The node client joins a local cluster as a non-data node. In other words, it doesn’t hold any data itself.

--

* **Transport client** - Can be used to send requests to a remote cluster.
It doesn’t join the cluster itself, but simply forwards requests to a node in the cluster.

--

> Both of these clients communicate on port 9300

---

## REST API

For other scenarios, Elasticsearch provides a REST API that communicates using JSON over HTTP. We will predominantly use this API to talk to ES.

--

- Available on port 9200

--

- We'll use the `curl` command to access the API via the command line

---

## curl Basics

The generic `curl` usage looks like:

```nohighlight
$ curl -X<VERB> <OPTIONS> '<URL>' -d '<BODY>'
```

--

<br>

The components are:

- `VERB` - the HTTP verb to use (`GET`, `POST`, etc.); default is `GET`
- `OPTIONS` - `curl` options; the most common for us will be `-H 'Content-type: application/json'` (to set the `Content-type` HTTP header)
- `URL` - the endpoint to make the request to
- `BODY` - the request body (usually omitted for `GET` requests); this can be split across lines

---

## Let's use Elasticsearch!

--

Install ES and its partner, Kibana, via Homebrew:

--

```
$ brew install elasticsearch
$ brew install kibana
```

--

Start both of the apps as services:

```
$ brew services start elasticsearch
$ brew services start kibana
```

---

## Verify that it's running

```
$ curl localhost:9200
```

You should receive a response containing info about the ES instance.

```
{
  "name" : "nPGurEK",
  "cluster_name" : "elasticsearch_chris",
  "cluster_uuid" : "08zrCrAiQwOEHAnByAIyLw",
  "version" : {
    "number" : "6.5.2",
    "build_flavor" : "default",
    ...
  },
  "tagline" : "You Know, for Search"
}
```

---

## The _cat API

The endpoint `/_cat` provides a list of metadata endpoints that we can access.

```
$ curl localhost:9200/_cat
=^.^=
/_cat/allocation
/_cat/shards
/_cat/shards/{index}
/_cat/master
/_cat/nodes
/_cat/tasks
/_cat/indices
/_cat/indices/{index}
[content omitted]
```

---

## The _cat API

What does this return?

```
$ curl localhost:9200/_cat/indices
```

---

## Indices

* An index is a collection of similar documents (books, people)

--

* Each piece of data is a document (like one record in SQL, e.g. a specific book)

--

* Each document is made up of fields, sometimes called properties

--

* Each field has a type, just like in SQL (integer, string, etc.)

--

* The types interact with searches. For instance, a geo distance query can only be run on a `geo_point` field

---

## ES versus Relational Databases

A rough parallel can be drawn:

Relational DB ⇒ Databases ⇒ Tables ⇒ Rows ⇒ Columns

Elasticsearch ⇒ Indices ⇒ Types ⇒ Documents ⇒ Fields

---

## Creating Documents

To create a document, `POST` to a URL of the form `/<INDEX>/<TYPE>` with the document as the request body:

--

```
$ curl -XPOST 'localhost:9200/library/books' -H 'Content-type: application/json' -d '
{
  "title": "Infinite Jest",
  "author": "David Foster Wallace"
}'
```

---

## The Response

```
{
  "_index": "library",
  "_type": "books",
  "_id": "h9FPyGcBWCX-HNnRrdF4",
  "_version": 1,
  "result": "created",
  "_shards": {
    "total": 2,
    "successful": 1,
    "failed": 0
  },
  "_seq_no": 0,
  "_primary_term": 1
}
```

---

## A New Index

Again, try:

```
$ curl localhost:9200/_cat/indices
```

---

# Shorthand

Rather than writing out the full `curl` command, we'll often use:

```json
POST /library/books?pretty
{
  "title": "The Overstory",
  "author": "Richard Powers"
}
```

when we mean:

```
$ curl -XPOST 'localhost:9200/library/books?pretty' -H 'Content-type: application/json' -d '
{
  "title": "The Overstory",
  "author": "Richard Powers"
}'
```

---

# Other Index Endpoints

With your knowledge of REST, see if you can figure out how to **retrieve** and **delete** a document.

**Tip**: Use the `?pretty` param to get nicely formatted output

--

```
GET /library/books/<ID>?pretty
```

```
DELETE /library/books/<ID>?pretty
```

---

# Basic Searching

Conduct a search via the `_search` endpoint for the index:

```
GET /library/_search?q=title:overstory
```

**Note**: The `pretty` parameter doesn't play nicely with this endpoint.

---

# Search Results

```
{
  "took" : 1,
  "timed_out" : false,
  "_shards" : {
    "total" : 5,
    "successful" : 5,
    "skipped" : 0,
    "failed" : 0
  },
  "hits" : {
    "total" : 1,
    "max_score" : 1.0,
    "hits" : [
      [results omitted]
    ]
  }
}
```

---

# Search Results

The response tells us:

* `hits` - search results info
* `hits.hits` - actual array of search results

Note that only 10 results are returned by default. We can customize the result set using the `from` and `size` parameters.

---

## Better Search

Before we explore some better search methods, let's start using Kibana to query Elasticsearch.

--

In your browser, go to [http://localhost:5601](http://localhost:5601)

Once you are there, select the **Dev Tools** view from the left-hand menu.

---

## Better Search

We can use the body of a `GET` request to submit more sophisticated search criteria. For example:

```
GET /library/_search
{
  "query": {
    "match_all": {}
  }
}
```

---

## Full-Text Queries

**Full text queries** are used to run searches on text fields, such as an email body or an item description.

### Examples

`match`, `multi_match`, `match_phrase`

--

They apply analyzers. (What's an analyzer?!)

---

## What's an Analyzer?

An analyzer:

* Breaks text content down into word stems (known as tokens or terms)
* Those tokens are added to the inverted index
* Words like "the" and "and" are skipped

--

* Can be customized for each text field in a mapping

--

* ES includes an English default analyzer

---

## Searching By Field

```
GET /library/_search
{
  "query" : {
    "match" : {
      "title" : "overstory"
    }
  }
}
```

---

## Aggregations

* Aggregations allow you to group and provide statistics about your data

--

* ES can return hits and aggregated results in _one_ response

--

* Hits and aggregations are listed separately in the response

---

## Aggregations

With results:

```
GET /library/_search
{
  "aggs": {
    "group_by_author": {
      "terms": {
        "field": "author.keyword"
      }
    }
  }
}
```

---

## Aggregations

Without results:

```
GET /library/_search
{
  "size": 0,
  "aggs": {
    "group_by_author": {
      "terms": {
        "field": "author.keyword"
      }
    }
  }
}
```

--

This is similar to the following SQL: `SELECT author, COUNT(*) FROM library GROUP BY author ORDER BY COUNT(*) DESC`

---

## Aggregations

* An aggregation is like a function that builds a result set over a group of documents
* You can get numeric aggregations like `min`, `max`, `avg`, `sum`, `range` that are relatively easy to get out of SQL but are tough in a distributed environment

---

## Nodes, Clusters & Shards, Oh My!

* A **cluster** is a group of one or more servers that index and search together.

--

* A **node** is a single server participating in the ES cluster.

--

* A **shard** is a bucket of data within a node. This allows one index to be split among multiple nodes in one cluster. A node has one or more shards.

--

* ES will manage finding data in the various shards via a **master node** when there are two or more nodes in your cluster.

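
---

## Shards: A Routing Sketch

As a rough illustration of how one index can be split across shards, the sketch below assigns each document ID to a shard by hashing it and taking the remainder modulo the number of primary shards. Only the hash-then-modulo idea is being shown: `pick_shard`, `distribute`, the CRC32 hash, and the `book-N` IDs are assumptions made for this example, not Elasticsearch's actual implementation. The shard count of 5 matches the `_shards.total` value in the search response shown earlier.

```python
import zlib
from collections import defaultdict

# Matches the "_shards.total" of 5 in the search response shown earlier.
NUM_PRIMARY_SHARDS = 5


def pick_shard(doc_id: str, num_shards: int = NUM_PRIMARY_SHARDS) -> int:
    """Map a document ID to a shard number in the range [0, num_shards).

    Assumption: CRC32 stands in for the real hash function; only the
    hash-then-modulo idea is being illustrated here.
    """
    return zlib.crc32(doc_id.encode("utf-8")) % num_shards


def distribute(doc_ids, num_shards: int = NUM_PRIMARY_SHARDS):
    """Group document IDs by the shard they would land on."""
    shards = defaultdict(list)
    for doc_id in doc_ids:
        shards[pick_shard(doc_id, num_shards)].append(doc_id)
    return dict(shards)


if __name__ == "__main__":
    # Hypothetical document IDs, used only for this demonstration.
    ids = [f"book-{n}" for n in range(10)]
    for shard, members in sorted(distribute(ids).items()):
        print(f"shard {shard}: {members}")
```

Because the same ID always hashes to the same shard, a lookup by ID only needs to visit one shard, while a search has to consult every shard and merge the results.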
---

## Clusters

<img src="images/clusterdiagram.png" style="height:500px !important;" />

---

## Fault Tolerance

* "health" and failure tolerance

--

* Replicas distributed on different nodes in case of failures

--

* Master node figures out which node has your data

--

* Scale out: searches can be executed on replicas in parallel

---

## How Healthy Is Our Cluster?

```
GET /_cat/health?v
```

--

**Green** - everything is good (cluster is fully functional)

**Yellow** - all data is available but some replicas are not yet allocated (cluster is fully functional, but more vulnerable to data loss/corruption)

**Red** - some data is not available for whatever reason (cluster is partially functional)

---

# Let Someone Else Manage It

Elasticsearch clusters are often set up on services such as:

* AWS Hosted ES Service
* Heroku's Bonsai service
* Searchly (IBM)

---

# ES Alternatives

* **Stretch PostgreSQL or PostGIS before you reach for ES**
* Apache Solr (also built on Lucene)
* Sphinx
* RavenDB (MS)